Recommendations for running Bitbucket in AWS

This page provides general sizing and configuration recommendations for running self-managed Bitbucket Data Center and Bitbucket Server instances on Amazon Web Services (AWS).

To get the best performance out of your Bitbucket deployment in AWS, it's important not to under-provision your instance's CPU, memory, or I/O resources. Note that the very smallest instance types provided by AWS do not meet Bitbucket's minimum hardware requirements and aren't recommended in production environments. If you don't provision sufficient resources for your workload, Bitbucket is likely to exhibit slow response times, display a "Bitbucket Server is reaching resource limits" banner, or fail to start altogether.

Recommended EC2 and EBS instance sizes

The following table lists the recommended EC2 and EBS configurations for operating a Bitbucket Server (standalone) or Bitbucket Data Center (clustered) instance under typical workloads.

To deploy an instance architected for both scale and resilience, we recommend deploying Bitbucket Data Center. The AWS Quick Start and associated CloudFormation template provide a set of recommended defaults, node size options, and scaling parameters.

Bitbucket Server

| Active users | EC2 instance type | EBS Optimized | EBS Volume type | IOPS |
|---|---|---|---|---|
| 0 – 250 | c3.large | No | General Purpose (SSD) | N/A |
| 250 – 500 | c3.xlarge | Yes | General Purpose (SSD) | N/A |
| 500 – 1000 | c3.2xlarge | Yes | Provisioned IOPS | 500 – 1000 |
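The Bitbucket Server table above can be expressed as a small lookup, which may be handy in provisioning scripts. This is only a sketch: the names `ServerSizing` and `recommend_server_sizing` are hypothetical, and because the table's ranges share their boundary values (250 and 500 each appear in two rows), the code assumes each upper bound belongs to the smaller tier.

```python
from typing import NamedTuple, Optional, Tuple


class ServerSizing(NamedTuple):
    instance_type: str
    ebs_optimized: bool
    volume_type: str
    iops_range: Optional[Tuple[int, int]]  # None where the table says N/A


# (upper bound of active users, recommendation), copied from the table above.
# Assumption: an upper bound such as 250 is treated as part of the smaller tier.
_SERVER_TIERS = [
    (250, ServerSizing("c3.large", False, "General Purpose (SSD)", None)),
    (500, ServerSizing("c3.xlarge", True, "General Purpose (SSD)", None)),
    (1000, ServerSizing("c3.2xlarge", True, "Provisioned IOPS", (500, 1000))),
]


def recommend_server_sizing(active_users: int) -> ServerSizing:
    """Return the table row that covers the given number of active users."""
    if active_users < 0:
        raise ValueError("active_users must not be negative")
    for upper_bound, sizing in _SERVER_TIERS:
        if active_users <= upper_bound:
            return sizing
    # The standalone table stops at 1000 users.
    raise ValueError("no standalone recommendation above 1000 active users")


if __name__ == "__main__":
    print(recommend_server_sizing(300))
```

The function raises rather than guessing for workloads above 1000 users, since the table gives no standalone row for them.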

Bitbucket Data Center (cluster nodes)

| Active users | EC2 instance type | Recommended number of nodes |
|---|---|---|
| 0 – 250 | c3.large | 1-2* |
| 250 – 500 | c3.xlarge | 1-2* |
| 500 – 1000 | c3.2xlarge | 2 |
| 1000+ | c3.4xlarge+ | 3+ |

* For high availability, we recommend deploying at least two cluster nodes.

Bitbucket Data Center (shared file server)

These recommendations assume a single EC2 instance with attached EBS volume acting as a shared NFS server for the cluster.

| Active users | EC2 instance type | EBS Volume type | IOPS |
|---|---|---|---|
| 0 – 250 | m4.large | General Purpose (SSD) | N/A |
| 250 – 500 | m4.xlarge | General Purpose (SSD) | N/A |
| 500 – 1000 | m4.2xlarge | Provisioned IOPS | 500 – 1000 |
| 1000+ | m4.4xlarge+ | Provisioned IOPS | 1000+ |

Amazon Elastic File System (EFS) is not supported for Bitbucket's shared home directory due to poor performance of Git operations.

See Amazon EC2 instance types, Amazon EBS–Optimized Instances, and Amazon EBS Volume Types for more information.

Note

In Bitbucket instances with high hosting workload, I/O performance is often the limiting factor. It's recommended that you pay particular attention to EBS volume options, especially the following:

- The size of an EBS volume also influences I/O performance. Larger EBS volumes generally have a larger slice of the available bandwidth and I/O operations per second (IOPS). A minimum of 100 GiB is recommended in production environments.

- The IOPS that can be sustained by General Purpose (SSD) volumes is limited by Amazon's I/O credits. If you exhaust your I/O credit balance, your IOPS will be limited to the baseline level. You should consider using a larger General Purpose (SSD) volume or switching to a Provisioned IOPS (SSD) volume. See Amazon EBS Volume Types for more information.

- New EBS volumes in particular have reduced performance the first time each block is accessed. See Pre-Warming Amazon EBS Volumes for more information.
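To make the I/O credit behaviour described above concrete, the following sketch estimates how long a General Purpose (SSD) volume can sustain a burst before it falls back to its baseline. The constants are assumptions taken from Amazon's published gp2 figures at the time of writing (3 IOPS per GiB with a floor of 100, bursts of up to 3,000 IOPS, and an initial bucket of 5.4 million I/O credits); check the current Amazon EBS Volume Types documentation before relying on them. The function names are illustrative, and the upper cap on baseline IOPS is ignored for simplicity.

```python
# Assumed gp2 figures from AWS documentation; verify before use.
GP2_IOPS_PER_GIB = 3
GP2_MIN_BASELINE_IOPS = 100
GP2_BURST_IOPS = 3000
GP2_CREDIT_BUCKET = 5_400_000


def gp2_baseline_iops(size_gib: int) -> int:
    """Baseline IOPS a gp2 volume of this size earns continuously."""
    return max(GP2_MIN_BASELINE_IOPS, GP2_IOPS_PER_GIB * size_gib)


def gp2_burst_seconds(size_gib: int, demand_iops: int = GP2_BURST_IOPS) -> float:
    """Seconds a full credit bucket lasts under a sustained demand.

    Credits drain at (demand - baseline) per second, so a volume whose
    baseline already covers the demand never runs out.
    """
    baseline = gp2_baseline_iops(size_gib)
    demand = min(demand_iops, GP2_BURST_IOPS)
    if demand <= baseline:
        return float("inf")
    return GP2_CREDIT_BUCKET / (demand - baseline)


if __name__ == "__main__":
    for size in (20, 100, 500, 1000):
        print(size, "GiB:", gp2_baseline_iops(size), "baseline IOPS,",
              gp2_burst_seconds(size), "s at full burst")
```

Under these assumptions, the recommended minimum of 100 GiB earns a baseline of 300 IOPS and can sustain a full 3,000 IOPS burst for 2,000 seconds (a little over half an hour) from a full bucket.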

The above recommendations are based on a typical workload with the specified number of active users. The resource requirements of an actual Bitbucket instance may vary markedly with a number of factors, including:

- The number of continuous integration servers cloning or fetching from Bitbucket Server: Bitbucket Server will use more resources if you have many build servers set to clone or fetch frequently from Bitbucket Server

- Whether continuous integration servers are using push mode notifications or polling repositories regularly to watch for updates

- Whether continuous integration servers are set to do full clones or shallow clones

- Whether the majority of traffic to Bitbucket Server is over HTTP, HTTPS, or SSH, and the encryption ciphers used

- The number and size of repositories: Bitbucket Server will use more resources when you work on many very large repositories

- The activity of your users: Bitbucket Server will use more resources if your users are actively using the Bitbucket Server web interface to browse, clone and push, and manipulate pull requests

- The number of open pull requests: Bitbucket Server will use more resources when there are many open pull requests, especially if they all target the same branch in a large, busy repository.

See Scaling Bitbucket Server and Scaling Bitbucket Server for Continuous Integration performance for more detailed information on Bitbucket Server resource requirements.

Other supported instance sizes

The following Amazon EC2 instances also meet or exceed Bitbucket Server's minimum hardware requirements. These instances provide different balances of CPU, memory, and I/O performance, and can cater for workloads that are more CPU-, memory-, or I/O-intensive than typical.

| Model | vCPU | Memory (GiB) | Instance Store (GB) | EBS Optimized | Dedicated EBS Throughput (Mbps) |
|---|---|---|---|---|---|
| c3.large | 2 | 3.75 | 2 x 16 SSD | - | - |
| c3.xlarge | 4 | 7.5 | 2 x 40 SSD | Yes | - |
| c3.2xlarge | 8 | 15 | 2 x 80 SSD | Yes | - |
| c3.4xlarge | 16 | 30 | 2 x 160 SSD | Yes | - |
| c3.8xlarge | 32 | 60 | 2 x 320 SSD | - | - |
| c4.large | 2 | 3.75 | - | Yes | 500 |
| c4.xlarge | 4 | 7.5 | - | Yes | 750 |
| c4.2xlarge | 8 | 15 | - | Yes | 1,000 |
| c4.4xlarge | 16 | 30 | - | Yes | 2,000 |
| c4.8xlarge | 36 | 60 | - | Yes | 4,000 |
| i2.xlarge | 4 | 30.5 | 1 x 800 SSD | Yes | - |
| i2.2xlarge | 8 | 61 | 2 x 800 SSD | Yes | - |
| i2.4xlarge | 16 | 122 | 4 x 800 SSD | Yes | - |
| i2.8xlarge | 32 | 244 | 8 x 800 SSD | - | - |
| m3.large | 2 | 7.5 | 1 x 32 SSD | - | - |
| m3.xlarge | 4 | 15 | 2 x 40 SSD | Yes | - |
| m3.2xlarge | 8 | 30 | 2 x 80 SSD | Yes | - |
| m4.large | 2 | 8 | - | Yes | 450 |
| m4.xlarge | 4 | 16 | - | Yes | 750 |
| m4.2xlarge | 8 | 32 | - | Yes | 1,000 |
| m4.4xlarge | 16 | 64 | - | Yes | 2,000 |
| m4.10xlarge | 40 | 160 | - | Yes | 4,000 |
| m4.16xlarge | 64 | 256 | - | Yes | 10,000 |
| r3.large | 2 | 15.25 | 1 x 32 SSD | - | - |
| r3.xlarge | 4 | 30.5 | 1 x 80 SSD | Yes | - |
| r3.2xlarge | 8 | 61 | 1 x 160 SSD | Yes | - |
| r3.4xlarge | 16 | 122 | 1 x 320 SSD | Yes | - |
| r3.8xlarge | 32 | 244 | 2 x 320 SSD | - | - |
| x1.32xlarge | 128 | 1,952 | 2 x 1,920 SSD | Yes | 10,000 |

In all AWS instance types, Bitbucket Server only supports "large" and higher instances. "Micro", "small", and "medium" sized instances do not meet Bitbucket's minimum hardware requirements and aren't recommended in production environments.

Bitbucket does not support D2 instances, Burstable Performance (T2) Instances, or Previous Generation Instances.
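The two rules above (only "large" and higher sizes, and no D2 or T2 instances) can be checked mechanically before launching an instance. The sketch below is illustrative only: `is_supported_instance_type` is a hypothetical helper, it assumes instance type names follow the usual `family.size` form, and it treats "nano" as unsupported on the assumption that it is smaller than "micro". It does not attempt to detect Previous Generation Instances, which would need a list maintained from Amazon's documentation.

```python
# Sizes below "large"; "nano" is an assumption not named in the text above.
UNSUPPORTED_SIZES = {"nano", "micro", "small", "medium"}
# Families the text above rules out explicitly.
UNSUPPORTED_FAMILIES = {"d2", "t2"}


def is_supported_instance_type(instance_type: str) -> bool:
    """Apply the size and family rules to a name such as 'c3.xlarge'."""
    family, separator, size = instance_type.strip().lower().partition(".")
    if not separator or not family or not size:
        raise ValueError(f"expected 'family.size', got {instance_type!r}")
    return family not in UNSUPPORTED_FAMILIES and size not in UNSUPPORTED_SIZES


if __name__ == "__main__":
    for name in ("c3.large", "m3.medium", "t2.large", "d2.xlarge"):
        print(name, is_supported_instance_type(name))
```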

In any instance type with available Instance Store device(s), a Bitbucket instance launched from the Bitbucket AMI will configure one Instance Store to contain Bitbucket Server's temporary files and caches. Instance Store can be faster than an EBS volume but the data doesn't persist if the instance is stopped or rebooted. Use of Instance Store can improve performance and reduce the load on EBS volumes. See Amazon EC2 Instance Store for more information.

Advanced: Monitoring Bitbucket to tune instance sizing

This section is for advanced users who wish to monitor the resource consumption of their instance and use this information to guide instance sizing. If performance at scale is a concern, we recommend deploying Bitbucket Data Center with elastic scaling, which alleviates the need to worry about how a single node can accommodate fluctuating or growing load. See the AWS Quick Start guide for Bitbucket Data Center for more details.

The above recommendations provide guidance for typical workloads. The resource consumption of every Bitbucket Server instance will vary with the mix of workload. The most reliable way to determine if your Bitbucket Server instance is under- or over-provisioned in AWS is to monitor its resource usage regularly with Amazon CloudWatch. This provides statistics on the actual amount of CPU, I/O, and network resources consumed by your Bitbucket Server instance.

The following simple example Bash script gathers CPU, I/O, and network statistics and displays them in a simple chart that can be used to guide your instance sizing decisions.

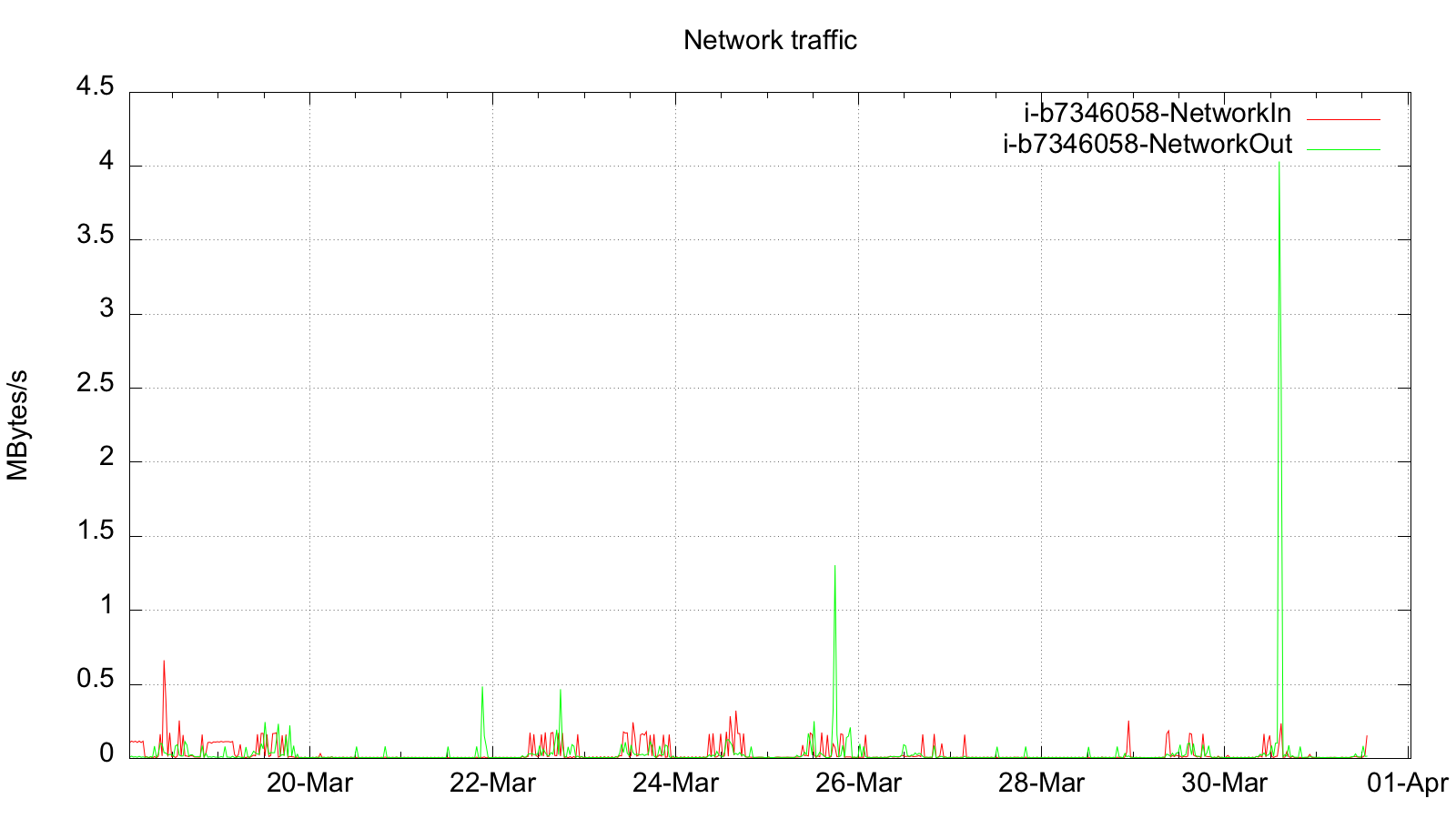

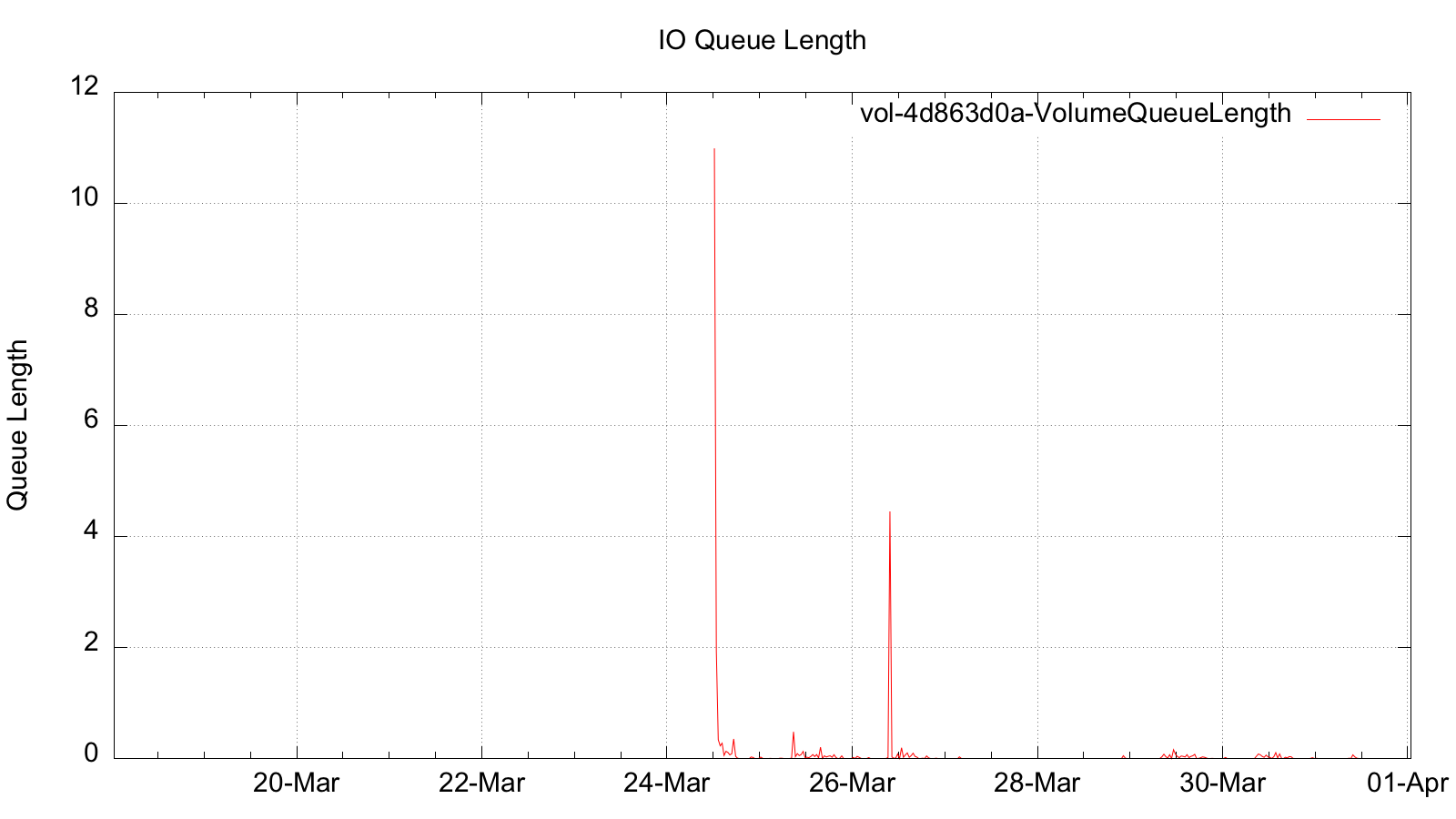

When run on a typical Bitbucket Server instance, this script produces charts such as the following:

You can use the information in charts such as this to decide whether CPU, network, or I/O resources are over- or under-provisioned in your instance.

If your instance is frequently saturating the maximum available CPU (taking into account the number of cores in your instance size), then this may indicate you need an EC2 instance with a larger CPU count. (Note that the CPU utilization reported by Amazon CloudWatch for smaller EC2 instance sizes may be influenced to some extent by the "noisy neighbor" phenomenon, if other tenants of the Amazon environment consume CPU cycles from the same physical hardware that your instance is running on.)
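As one way to quantify "frequently saturating", the sketch below pulls the `CPUUtilization` metric from Amazon CloudWatch and reports the fraction of sampling periods whose peak reached a threshold. It is not the script referred to on this page: it assumes the third-party `boto3` SDK is installed and AWS credentials are configured, the 90% threshold and function names are arbitrary illustrative choices, and CloudWatch already reports this metric as a percentage across all of the instance's vCPUs.

```python
from datetime import datetime, timedelta, timezone


def fetch_cpu_datapoints(instance_id: str, hours: int = 24, period: int = 300):
    """Fetch CPUUtilization datapoints for one EC2 instance from CloudWatch."""
    import boto3  # third-party AWS SDK, imported lazily (assumed installed)

    end = datetime.now(timezone.utc)
    response = boto3.client("cloudwatch").get_metric_statistics(
        Namespace="AWS/EC2",
        MetricName="CPUUtilization",
        Dimensions=[{"Name": "InstanceId", "Value": instance_id}],
        StartTime=end - timedelta(hours=hours),
        EndTime=end,
        Period=period,
        Statistics=["Average", "Maximum"],
    )
    return response["Datapoints"]


def saturated_fraction(datapoints, threshold_percent: float = 90.0) -> float:
    """Fraction of periods whose Maximum CPU reached the threshold."""
    if not datapoints:
        return 0.0
    hot = sum(1 for point in datapoints if point["Maximum"] >= threshold_percent)
    return hot / len(datapoints)


if __name__ == "__main__":
    # Offline example with hand-written datapoints in CloudWatch's shape.
    sample = [{"Maximum": 95.0}, {"Maximum": 50.0}, {"Maximum": 91.0}, {"Maximum": 10.0}]
    print(saturated_fraction(sample))
```

A consistently high fraction over several days is a stronger signal than a single spike, for example one caused by a large clone.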

If your instance is frequently exceeding the IOPS available to your EBS volume and/or is frequently queuing I/O requests, then this may indicate you need to upgrade to an EBS optimized instance and/or increase the Provisioned IOPS on your EBS volume. See EBS Volume Types for more information.
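A rough way to compare a volume's actual load with its available IOPS is to divide the operation counts that CloudWatch reports per period by the period length. The sketch below assumes you have already collected the per-period `Sum` of the `VolumeReadOps` and `VolumeWriteOps` metrics for the volume; the function names are hypothetical. It averages over the whole period, so short bursts within a period are smoothed out and the result is a lower bound on peak IOPS.

```python
from typing import Iterable, Tuple


def average_iops(read_ops: float, write_ops: float, period_seconds: int) -> float:
    """Average IOPS over one period from summed read and write operation counts."""
    if period_seconds <= 0:
        raise ValueError("period_seconds must be positive")
    return (read_ops + write_ops) / period_seconds


def fraction_over_limit(
    periods: Iterable[Tuple[float, float]],
    iops_limit: float,
    period_seconds: int = 300,
) -> float:
    """Fraction of periods whose average IOPS reached the volume's limit.

    Each item in `periods` is (VolumeReadOps sum, VolumeWriteOps sum).
    """
    samples = list(periods)
    if not samples:
        return 0.0
    over = sum(
        1 for reads, writes in samples
        if average_iops(reads, writes, period_seconds) >= iops_limit
    )
    return over / len(samples)


if __name__ == "__main__":
    # Four 5-minute periods measured against a 1,000 IOPS volume.
    observed = [(60_000, 30_000), (150_000, 150_000), (0, 0), (300_000, 0)]
    print(fraction_over_limit(observed, iops_limit=1000))
```

Pairing this with the volume's queue length metric gives a fuller picture, since a long queue at moderate IOPS can also indicate that the instance, rather than the volume, is the bottleneck.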

If your instance is frequently limited by network traffic, then this may indicate you need to choose an EC2 instance with a larger available slice of network bandwidth.