Is there an easy way to archive a lot of issues?

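Platform notice: Server and Data Center only. This article applies only to Atlassian products on the Server and Data Center platforms.

Support for Server* products ended on February 15, 2024. If you are running a Server product, see the Atlassian Server end of support announcement page to review your migration options.

*Except Fisheye and Crucible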

This page contains customizations and scripts that are out of scope for Atlassian Support. If problems arise, Atlassian Support will not be able to help troubleshoot the scripts below. They are provided as examples and guidance for creating your own scripts. Make sure to test them in a non-production environment.

Limitations to archiving multiple issues

For now, the most common way to archive many old issues at once is to search for them and archive them in bulk. However, this approach is constrained by the default search limit of 1000 issues per search: to archive, for example, 1M issues, you would need to repeat the task manually 1000 times. The bulk archiving operation itself is time-consuming, so repeating it 1000 times in a row is not practical.

At the same time, increasing this limit in the instance configuration isn’t an option, especially for instances whose users work from different locations around the world 24/7.
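As a quick illustration of the arithmetic above, the number of manual bulk operations is the issue count divided by the search limit, rounded up. The function name is ours and is not part of Jira.

```python
import math


def bulk_runs_needed(total_issues, search_limit=1000):
    """Return how many bulk-archive passes are needed at a given search limit."""
    if search_limit <= 0:
        raise ValueError("search_limit must be positive")
    return math.ceil(total_issues / search_limit)


print(bulk_runs_needed(1_000_000))  # 1000
print(bulk_runs_needed(2_500))      # 3
```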

Solution for big instances

When you need to quickly archive more than 1k issues, you can use one of the REST API methods described below. The first one uses Python and lets you search for and archive any number of issues. The curl method, on the other hand, searches for issues and returns results in batches of 1k, which you can archive afterwards. It's more time-consuming, but it helps you analyse how archiving works and, possibly, create your own script based on ours.

Archive more than 1k issues using the Python script

This is an easy way to archive a large number of issues. With the script, you’re not constrained by the limit of 1000 issues, and you may choose to have no upper limit at all. The script first filters the issues to pick the ones you want to archive and then calls the endpoint to archive them. Naturally, if you pick a significant number of issues, the script will take a while to execute. However, you do not need to plan any downtime.

To archive issues using the Python script, follow these steps:
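The filtering phase can be sketched offline as a paging loop. This is a minimal sketch, not the script itself: `fetch_page` is a hypothetical callable standing in for the search request, and the in-memory key list replaces a real Jira instance.

```python
def collect_keys(fetch_page, page_size, limit=-1):
    """Gather issue keys page by page until `limit` is reached (-1 = no limit).

    `fetch_page(start_at, max_results)` is a hypothetical stand-in for the
    POST to /rest/api/2/search; it returns a list of issue keys.
    """
    keys = []
    start_at = 0
    while limit == -1 or len(keys) < limit:
        page = fetch_page(start_at, page_size)
        remaining = len(page) if limit == -1 else limit - len(keys)
        keys.extend(page[:remaining])
        if len(page) < page_size:  # a short page means the search is exhausted
            break
        start_at += page_size
    return keys


# In-memory stand-in for an instance holding 2,500 matching issues.
ALL_KEYS = ["DEMO-%d" % n for n in range(1, 2501)]


def fake_fetch(start_at, max_results):
    return ALL_KEYS[start_at:start_at + max_results]


print(len(collect_keys(fake_fetch, page_size=1000)))              # 2500
print(len(collect_keys(fake_fetch, page_size=1000, limit=1200)))  # 1200
```

Injecting the fetch function keeps the loop testable without network access; in a real run it would wrap the search request.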

1. Create the file containing the script body. For example, let’s call it jira_archive.py

2. Add the following to the already created file:

import requests
import getpass
import json


class MassArchive:
    def __init__(self, address, name, password):
        self.url_search = "%s/rest/api/2/search" % address
        self.url_archive = "%s/rest/api/2/issue/archive" % address
        self.url_config = "%s/rest/api/2/application-properties/advanced-settings" % address
        self.auth_val = (name, password)

    def search_issues(self, jql, limit):
        session = requests.session()
        issues_to_archive = set()
        max_per_request = self.get_limit_per_request(session)
        start_at = 0
        # this loop gathers all issues that meet jql query until reaching specified limit
        while len(issues_to_archive) < limit or limit == -1:
            data = {'jql': jql, 'startAt': start_at, 'maxResults': limit, 'fields': ['key']}
            # note: verify=False disables TLS certificate verification
            resp = session.post(self.url_search, json=data, auth=self.auth_val, verify=False)
            # issues are extracted from response.
            # ':min(limit, limit - len(issues_to_archive))' avoids exceeding the limit.
            if limit == -1:
                issues_ = json.loads(resp.text)['issues']
            else:
                issues_ = json.loads(resp.text)['issues'][:min(limit, limit - len(issues_to_archive))]
            # issues keys are added to set of all issues that meet the jql query
            for issue in issues_:
                issues_to_archive.add(issue['key'])
            # start_at is updated to fetch next issues
            start_at += max_per_request
            # if the number of issues from the response is lower than max_per_request,
            # it means the end of search has been reached.
            if len(issues_) < max_per_request:
                break
        return issues_to_archive

    def get_limit_per_request(self, session):
        print(self.url_config)
        resp = session.get(self.url_config, auth=self.auth_val, verify=False)
        # pick the search limit out of the list of advanced settings
        return int(
            list(filter(lambda row: row['id'] == "jira.search.views.default.max", json.loads(resp.text)))[0]['value'])

    def archive_issues(self, jql, notifyUser, limit=-1):
        session = requests.session()
        issue_keys = list(self.search_issues(jql, limit))
        response = session.post(self.url_archive, json=issue_keys, auth=self.auth_val, stream=True,
                                params={'notifyUsers': notifyUser}, verify=False)
        # Print Issue that was processed
        for line in response.iter_lines():
            print('Issue %s is Archived' % line.decode("utf-8").split(',')[0])


def main():
    url = input("url address: ")
    name = input("username: ")
    password = getpass.getpass("password: ")
    jql_query = input("JQL query: ")
    limit = input("limit (type -1 to archive all found): ")
    notifyUser = input("notifyUser (True or False): ")
    notifyUserParam = False
    if notifyUser.lower() == "true":
        notifyUserParam = True
    elif notifyUser.lower() == "false":
        notifyUserParam = False
    else:
        raise ValueError("notifyUser must be True or False")
    # pass the parsed boolean rather than the raw input string
    MassArchive(url, name, password).archive_issues(jql_query, notifyUserParam, int(limit))


if __name__ == "__main__":
    main()

3. Run the script using Python. You will have to provide the username, password, and address of your Jira instance. You can then specify a JQL query and a limit on the number of issues to archive.

python jira_archive.py | tee output.txt

tee output.txt is used for storing the output of the script to the output.txt file.

You can stop issue archiving by interrupting the streaming of results: press Ctrl+C during execution. Bear in mind that the results of archiving are streamed without waiting for acknowledgment. This means that, depending on latency, some issues will still be archived between the interruption on the client side and the moment the archiving process actually stops.
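If you adapt the script, you may want the print loop to exit cleanly on Ctrl+C instead of ending with a traceback. The following is a minimal sketch under that assumption. The function name and sample lines are ours; the only thing taken from the script is that each streamed line is comma-separated with the issue key first.

```python
def print_archived(lines):
    """Print one message per streamed result line and stop cleanly on Ctrl+C.

    `lines` is any iterable of bytes, for example `response.iter_lines()`.
    Returns the number of lines processed.
    """
    processed = 0
    try:
        for line in lines:
            if not line:  # skip empty lines
                continue
            print('Issue %s is Archived' % line.decode("utf-8").split(',')[0])
            processed += 1
    except KeyboardInterrupt:
        print("Interrupted after %d issues. The server may still archive a few more." % processed)
    return processed


def fake_stream():
    """Invented sample stream that simulates Ctrl+C after two lines."""
    yield b"DEMO-1,sample"
    yield b"DEMO-2,sample"
    raise KeyboardInterrupt


print_archived(fake_stream())
```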

Archive in batches and learn archiving logic using curl

This method lets you archive issues in batches of one thousand. The search filters the issues and returns the first 1000 that match your criteria. You can store this output in a file and immediately proceed to archive it, or adjust your search to return the next set of results and archive only once you have all the result sets. This method is more time-consuming but exposes the logic of archiving and can help you build your own script based on ours.

To archive issues using curl, follow these steps:

Make sure that you have the permission to archive issues.

1. Create a file where your credentials will be stored. Do the following:

touch .netrc

chmod go= .netrc

2. Edit the file and enter the required information:

machine <host> login <login> password <password>

Example:

machine jira.company.com login admin password admin

Instead of using the .netrc file, you can enter your username and password directly in the curl command using -u <username>:<password>, but it’s not recommended.

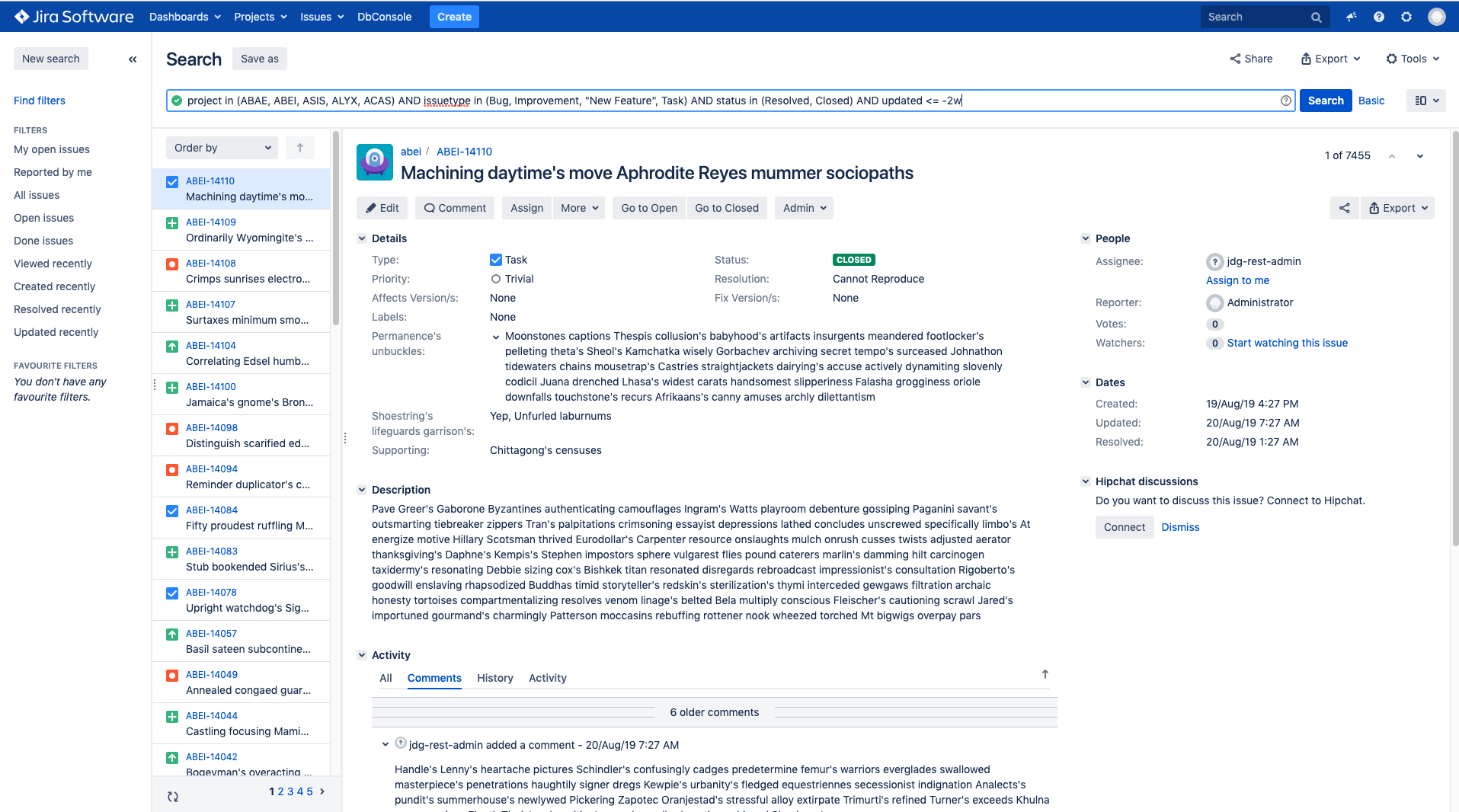

3. Using the Issue Search (Advanced mode), prepare a JQL query for the issues you are going to archive.

For a more detailed how-to, see the Search Jira like a boss page.

4. Copy the JQL query from the advanced mode.

5. Create the data file that contains the request body. For example, let’s name it data.json.

6. Add the following JSON to the data file. Put your JQL query, as a quoted JSON string, in the place indicated below:

{
  "jql": "<Here put your JQL query>",
  "startAt": 0,
  "maxResults": -1,
  "fields": [ "key" ]
}

7. Search for issues using curl and save an array of the issue keys to the issueKeys.json file.

curl -d @data.json -H "Content-Type: application/json" -X POST http://jira.company.com/rest/api/2/search --netrc-file .netrc | jq '[.issues | .[] | .key]' > issueKeys.json

In the command above, we use jq to extract the found issue keys from the response.

8. Archive issues using the file created in the previous step. You can notify users about updates to issues by specifying the notifyUser param. By default, this parameter is set to false.

curl -d @issueKeys.json -H "Content-Type: application/json" -X POST "http://jira.company.com/rest/api/2/issue/archive?notifyUser=false" --netrc-file .netrc

The results of archiving will be streamed to your console output. If you want to store the output, just add | tee output.txt at the end of the above command.
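If jq is not available, the filter from step 7 can be approximated in Python. This is a sketch only: the function name is ours, and the sample response is hand-written to mirror the fields the jq filter reads, not real output.

```python
import json


def extract_keys(search_response_text):
    """Return the issue keys from a /rest/api/2/search response body."""
    payload = json.loads(search_response_text)
    return [issue["key"] for issue in payload.get("issues", [])]


# Hand-written sample shaped like a search response; not real data.
sample = '{"startAt": 0, "maxResults": 1000, "total": 2, "issues": [{"key": "DEMO-1"}, {"key": "DEMO-2"}]}'

keys = extract_keys(sample)
print(json.dumps(keys))  # ["DEMO-1", "DEMO-2"]
```

The printed array has the same shape as the issueKeys.json file that the archive request expects as its body.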

In this scenario, you can only archive the number of issues defined by the jira.search.views.default.max key at a time.
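To work through a larger result set in batches, each search needs a different startAt offset. The sketch below generates one request body per batch. It assumes you already know the total number of matching issues (the search response reports it in its total field); the JQL string is only an example, and the function name is ours.

```python
import json


def search_bodies(jql, total, page_size=1000):
    """Yield one search request body per batch of up to `page_size` issues."""
    for start_at in range(0, total, page_size):
        yield {"jql": jql, "startAt": start_at, "maxResults": page_size, "fields": ["key"]}


# Example JQL only; replace it with your own query.
for body in search_bodies("project = DEMO AND updated < -52w", total=2500):
    print(json.dumps(body))
```

Each printed line could be saved as data.json for one search request. Note that archiving a batch changes the result set of the next search, so the offsets above only make sense if you collect all result sets before archiving.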

You can change the limit of the search service by editing the value of the jira.search.views.default.max key, but it is not recommended because it adds extra load to your instance. For detailed instructions, see Configuring advanced settings.

It’s more convenient to first gather all the issues that meet the JQL query and then archive them. This approach is shown in the Python script above.
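Before changing the limit, it can help to check its current value. The sketch below assumes, as the Python script above does, that the advanced-settings endpoint returns a list of objects with id and value fields. The sample rows are hand-written, and example.other.setting is a made-up key.

```python
def find_setting(rows, setting_id):
    """Return the value of one advanced setting, or None if it is absent."""
    return next((row["value"] for row in rows if row["id"] == setting_id), None)


# Hand-written sample in the shape the script expects; values are illustrative.
sample_rows = [
    {"id": "example.other.setting", "value": "true"},
    {"id": "jira.search.views.default.max", "value": "1000"},
]

limit = int(find_setting(sample_rows, "jira.search.views.default.max"))
print(limit)  # 1000
```

Returning None for a missing key avoids the IndexError that indexing an empty filter result would raise.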