Jira Rate Limiting is not working as configured

Platform Notice: Data Center Only - This article only applies to Atlassian products on the Data Center platform.

Note that this KB was created for the Data Center version of the product. Data Center KBs for non-Data-Center-specific features may also work for Server versions of the product, however they have not been tested. Support for Server* products ended on February 15th 2024. If you are running a Server product, you can visit the Atlassian Server end of support announcement to review your migration options.

*Except Fisheye and Crucible

Summary

When testing rate limiting in Jira, a user may notice that the X-RateLimit-Remaining response header stays the same between requests, or that more requests succeed than the configured maximum, and conclude that the feature is not working.

Environment

Jira 8.x, 9.x

Diagnosis

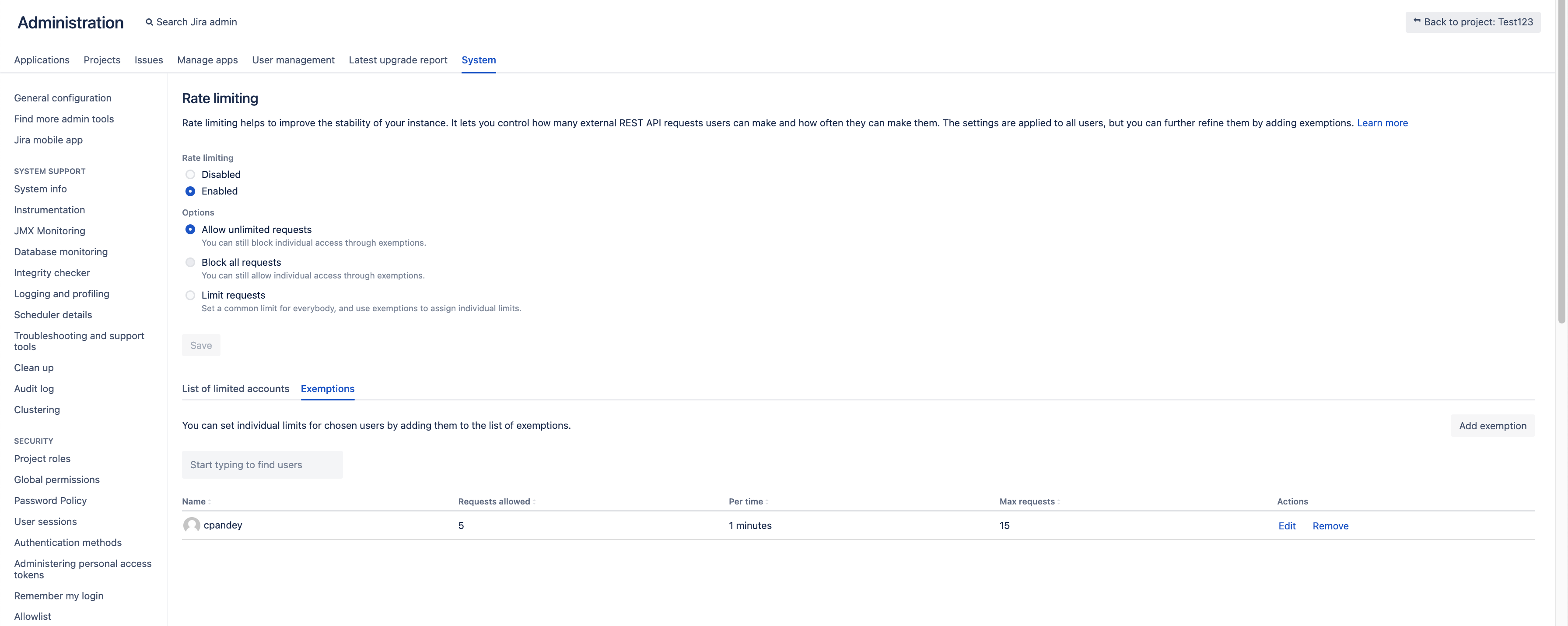

Consider a rate limit configuration with an exemption for a user, where Requests allowed for that user is set to 5 every 1 minute and Max requests is set to 15.

Now call the Jira REST API in a loop, one request per second, 18 times, which is more than the configured Max requests.

The results show that 16 requests succeed, although Max requests is set to 15.

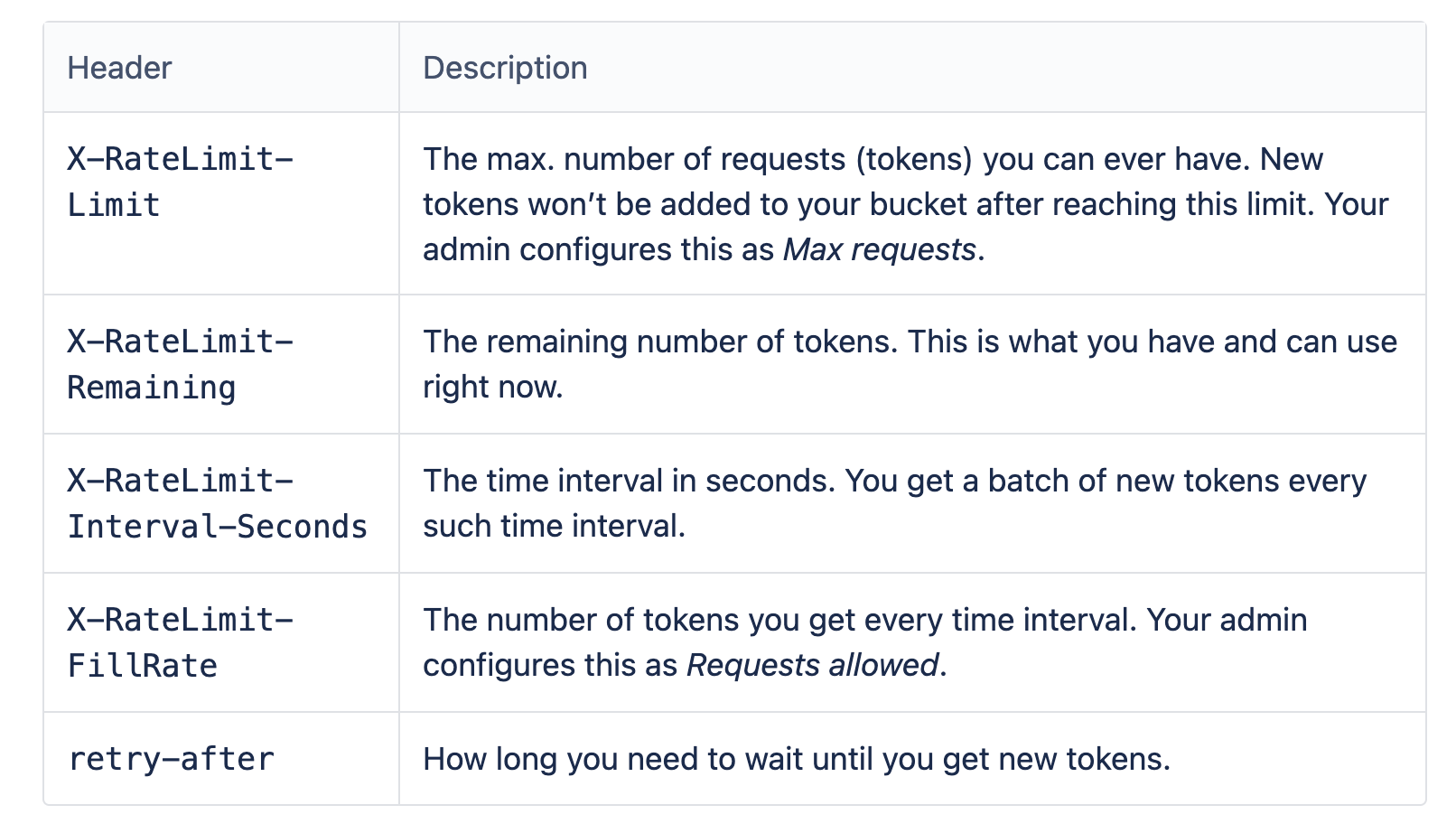

    ❯ for i in $(seq 18); do echo ---- $i ----; curl -sI -u cpandey:admin 'http://localhost:8080/rest/api/2/myself'; sleep 1; done > header.txt
    ❯ grep -c "HTTP/1.1 200" header.txt
    16
    ❯ grep -c "HTTP/1.1 429" header.txt
    2

The important response headers to be aware of are described in the documentation: Improving instance stability with rate limiting.

The reason for this behavior is that the user receives a new token at a constant interval. This interval is the Time interval (in seconds) divided by Requests allowed. Here that is 60 / 5 = 12, so every 12 seconds the user receives a token that allows one more successful request.
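The interval arithmetic can be sketched in a few lines of Python. The function name `refill_interval_seconds` is made up for this illustration and is not part of Jira.

```python
def refill_interval_seconds(interval_seconds: float, requests_allowed: int) -> float:
    """Seconds between two consecutive token refills (illustrative helper, not a Jira API)."""
    if requests_allowed <= 0:
        raise ValueError("requests_allowed must be positive")
    return interval_seconds / requests_allowed


# Configuration from this article: 5 requests allowed every 1 minute (60 seconds).
print(refill_interval_seconds(60, 5))  # 12.0
```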

In the headers below, X-RateLimit-Remaining is the same (3) for requests 12 and 13. This means the user received the first additional token 12 seconds after the first request was sent, and is therefore allowed to send one more request.

Further, the response to the 16th request has Retry-After set to 8, which means the user can make a successful request again in 8 seconds. Until then, all requests from that user fail with HTTP status 429.

Why is Retry-After 8 seconds on the 16th request? The last token was received at the 13th request, and about 4 seconds passed between the 13th and 16th requests. Since tokens arrive every 12 seconds, the next token is due 12 - 4 = 8 seconds later.

    ❯ egrep "X-RateLimit-Remaining|HTTP|Retry*|Date|----*" header.txt
    ---- 1 ----
    HTTP/1.1 200
    X-RateLimit-Remaining: 14
    Retry-After: 0
    Date: Tue, 11 Apr 2023 13:03:22 GMT
    ---- 2 ----
    HTTP/1.1 200
    X-RateLimit-Remaining: 13
    Retry-After: 0
    Date: Tue, 11 Apr 2023 13:03:23 GMT
    ---- 3 ----
    HTTP/1.1 200
    X-RateLimit-Remaining: 12
    Retry-After: 0
    Date: Tue, 11 Apr 2023 13:03:24 GMT
    ---- 4 ----
    HTTP/1.1 200
    X-RateLimit-Remaining: 11
    Retry-After: 0
    Date: Tue, 11 Apr 2023 13:03:25 GMT
    ---- 5 ----
    HTTP/1.1 200
    X-RateLimit-Remaining: 10
    Retry-After: 0
    Date: Tue, 11 Apr 2023 13:03:26 GMT
    ---- 6 ----
    HTTP/1.1 200
    X-RateLimit-Remaining: 9
    Retry-After: 0
    Date: Tue, 11 Apr 2023 13:03:27 GMT
    ---- 7 ----
    HTTP/1.1 200
    X-RateLimit-Remaining: 8
    Retry-After: 0
    Date: Tue, 11 Apr 2023 13:03:28 GMT
    ---- 8 ----
    HTTP/1.1 200
    X-RateLimit-Remaining: 7
    Retry-After: 0
    Date: Tue, 11 Apr 2023 13:03:29 GMT
    ---- 9 ----
    HTTP/1.1 200
    X-RateLimit-Remaining: 6
    Retry-After: 0
    Date: Tue, 11 Apr 2023 13:03:30 GMT
    ---- 10 ----
    HTTP/1.1 200
    X-RateLimit-Remaining: 5
    Retry-After: 0
    Date: Tue, 11 Apr 2023 13:03:31 GMT
    ---- 11 ----
    HTTP/1.1 200
    X-RateLimit-Remaining: 4
    Retry-After: 0
    Date: Tue, 11 Apr 2023 13:03:32 GMT
    ---- 12 ----
    HTTP/1.1 200
    X-RateLimit-Remaining: 3
    Retry-After: 0
    Date: Tue, 11 Apr 2023 13:03:33 GMT
    ---- 13 ----
    HTTP/1.1 200
    X-RateLimit-Remaining: 3
    Retry-After: 0
    Date: Tue, 11 Apr 2023 13:03:34 GMT
    ---- 14 ----
    HTTP/1.1 200
    X-RateLimit-Remaining: 2
    Retry-After: 0
    Date: Tue, 11 Apr 2023 13:03:36 GMT
    ---- 15 ----
    HTTP/1.1 200
    X-RateLimit-Remaining: 1
    Retry-After: 0
    Date: Tue, 11 Apr 2023 13:03:37 GMT
    ---- 16 ----
    HTTP/1.1 200
    X-RateLimit-Remaining: 0
    Retry-After: 8
    Date: Tue, 11 Apr 2023 13:03:38 GMT
    ---- 17 ----
    HTTP/1.1 429
    X-RateLimit-Remaining: 0
    Retry-After: 6
    Date: Tue, 11 Apr 2023 13:03:39 GMT
    ---- 18 ----
    HTTP/1.1 429
    X-RateLimit-Remaining: 0
    Retry-After: 5
    Date: Tue, 11 Apr 2023 13:03:40 GMT
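To see why 16 of the 18 requests succeed, the run can be replayed against a simplified token bucket. The sketch below is an assumption-laden model, not Jira's implementation: it assumes the bucket starts full at Max requests (15) and gains one token at fixed 12-second ticks counted from the first request. The function name `simulate` and its parameters are made up for this illustration, and the Retry-After values are not modeled. The offsets are the seconds elapsed since the first response, taken from the Date headers.

```python
def simulate(offsets, capacity=15, interval_seconds=60, requests_allowed=5):
    """Replay requests sent at the given second offsets against a simplified token bucket.

    Returns a list of (status, remaining) tuples. This is a simplified model,
    not Jira's code: the bucket starts full and gains one token at fixed
    intervals counted from the first request.
    """
    refill_every = interval_seconds / requests_allowed  # 12 seconds here
    tokens = capacity
    refills_applied = 0
    results = []
    for t in offsets:
        # Credit every refill tick that has elapsed since the first request.
        ticks = int((t - offsets[0]) // refill_every)
        tokens = min(capacity, tokens + (ticks - refills_applied))
        refills_applied = ticks
        if tokens > 0:
            tokens -= 1
            results.append((200, tokens))
        else:
            results.append((429, 0))
    return results


# Seconds since the first response, read from the Date headers (13:03:22 is offset 0).
observed_offsets = list(range(13)) + [14, 15, 16, 17, 18]
results = simulate(observed_offsets)
print(sum(1 for status, _ in results if status == 200))  # 16
print(sum(1 for status, _ in results if status == 429))  # 2
print([remaining for _, remaining in results][10:16])    # [4, 3, 3, 2, 1, 0]
```

Under these assumptions the model reproduces the repeated value of 3 at requests 12 and 13 and the first 429 at request 17.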

Cause

Rate limiting operates on a token-per-request basis with a fill rate, so you gain additional tokens at the fill rate whenever you back off and start over. For example, suppose the response headers show the following settings:

    X-Ratelimit-Fillrate: 30
    X-Ratelimit-Interval-Seconds: 60
    X-Ratelimit-Limit: 30
    X-Ratelimit-Remaining: 29

Here the fill rate is 30 tokens per 60 seconds, so one token is added every 2 seconds.

If you send all requests in a burst, you exhaust your tokens and no more requests are allowed. You will receive a 429.

If you then back off for some time, tokens accumulate again at the fill rate and can be used for new requests.
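On the client side, backing off can be as simple as waiting for the number of seconds given in Retry-After before retrying. The following is a minimal sketch, not an official client: `call_with_backoff` and the `send` callable (which returns a status code and a header mapping) are hypothetical names introduced for illustration, and the transport is left to the caller.

```python
import time
from typing import Callable, Mapping, Tuple

Response = Tuple[int, Mapping[str, str]]


def call_with_backoff(
    send: Callable[[], Response],
    max_attempts: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call send() until it stops returning 429 or max_attempts is reached.

    send is a hypothetical callable returning (status_code, headers).
    Returns the last status code seen.
    """
    status = 0
    for attempt in range(max_attempts):
        status, headers = send()
        if status != 429:
            return status
        if attempt < max_attempts - 1:
            # Header names are case-insensitive, so normalize before the lookup.
            lowered = {name.lower(): value for name, value in headers.items()}
            # Wait as long as the server asks; fall back to 1 second if the header is absent.
            sleep(float(lowered.get("retry-after", "1")))
    return status


# Stand-in transport for illustration: two rejections, then success.
responses = iter([(429, {"Retry-After": "8"}), (429, {"Retry-After": "6"}), (200, {})])
waits = []
print(call_with_backoff(lambda: next(responses), sleep=waits.append))  # 200
print(waits)  # [8.0, 6.0]
```

Passing `sleep` as a parameter keeps the sketch testable without real waiting; in real use the default `time.sleep` applies.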

Rate limit configuration per time: irrespective of whether Seconds, Minutes, or Hours is selected, Jira translates the time interval into seconds. The token is therefore granted to the user at a constant interval (in seconds), which allows them to send the next successful request.

Rate limiting is based on the token bucket algorithm.

Also note that these rate limit headers are only returned for authenticated requests. They do not appear in the response headers for anonymous users.

Solution

You can test whether rate limiting works before receiving any traffic as follows, using the rate limit settings above.

The first loop below sends a burst of 10 requests with no time for tokens to refill. The counter decreases with each request, and the last response shows 20 remaining.

    for i in $(seq 10); do curl -v -u user:pass http://localhost:8080/rest/api/2/myself; done

The second one below refills the tokens between requests, resulting in the same 29 remaining after every request.

    for i in $(seq 10); do curl -v -u user:pass http://localhost:8080/rest/api/2/myself; sleep 2; done
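To compare the two runs without reading every response by hand, the headers can be summarized with a short script. This is a sketch under one assumption: the headers were captured as raw text with `curl -sI ... > header.txt`, as in the Diagnosis section, rather than with `curl -v`. The function name `summarize` is made up for this illustration.

```python
import re
from collections import Counter


def summarize(header_dump: str):
    """Return (status_counts, remaining_values) parsed from raw HTTP response headers."""
    statuses = Counter(
        re.findall(r"^HTTP/\S+ (\d{3})", header_dump, flags=re.MULTILINE)
    )
    remaining = [
        int(value)
        for value in re.findall(
            r"^X-RateLimit-Remaining:\s*(\d+)",
            header_dump,
            flags=re.MULTILINE | re.IGNORECASE,
        )
    ]
    return statuses, remaining


# Tiny hand-written sample in the same shape as curl -sI output.
sample = (
    "HTTP/1.1 200 \r\nX-RateLimit-Remaining: 29\r\n\r\n"
    "HTTP/1.1 429 \r\nX-RateLimit-Remaining: 0\r\n"
)
counts, remaining = summarize(sample)
print(dict(counts))  # {'200': 1, '429': 1}
print(remaining)     # [29, 0]
```

For a real run, read the file first, for example `summarize(open("header.txt").read())`. A burst run should show a steadily decreasing list, while a paced run should show the same value repeated.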